The landscape of what’s possible within the browser is quietly undergoing a significant shift, and for Google Workspace Add-on developers, this could be a game-changer. Chrome’s AI mission is simple yet powerful: to ‘make Chrome and the web smarter for all developers and all users.’ We’re seeing this vision begin to take shape with the emergence of experimental, built-in AI APIs in Google Chrome, designed to bring powerful capabilities using models like Gemini Nano directly to the user’s device.

There is a growing suite of these on-device APIs. This includes the versatile Prompt API, specialised Writing Assistance APIs (like Summarizer, Writer, and Rewriter), Translation APIs (Language Detector and Translator), and even a newly introduced Proofreader API. For many existing Workspace Add-on developers, some of the more task-specific APIs could offer a relatively straightforward way to integrate AI-powered enhancements.

However, my focus for this exploration, and the core of the accompanying demo Add-on being introduced here, is the Prompt API. What makes this API particularly compelling for me is its direct line to Gemini Nano, a model that runs locally, right within the user’s Chrome browser. This on-device approach means that, unlike solutions requiring calls to external third-party GenAI services, interactions can happen entirely client-side. The Prompt API provides web applications, including Google Workspace Editor Add-ons, with an open-ended way to harness this local AI for text-based generative tasks.

To put the Prompt API’s text processing abilities through their paces in a practical Workspace context, I’ve developed a Google Workspace Add-on focused on text-to-diagram generation. This post delves into this demonstration and discusses what on-device AI, through the versatile Prompt API, could mean for the future of Workspace Add-on development, including its emerging multimodal potential.

Why This Matters: New Horizons for Google Workspace Developers

Using an on-device LLM like Gemini Nano offers several key benefits for Workspace Add-on developers:

- Enhanced Data Privacy & Simplified Governance: Sensitive user data doesn’t need to leave the browser, because the AI processing itself makes no calls to third-party servers. That is a huge plus for privacy and can simplify data governance, including Google Workspace Marketplace verification and Add-on data privacy policies.

- Potential for Cost-Free GenAI (with caveats!): Client-side processing can reduce or eliminate server-side AI costs for certain tasks. Remember, “Nano” is smaller than its cloud counterparts, so it’s best for well-scoped features. This smaller size means developers should think carefully about their implementation, particularly around prompt design to achieve the desired accuracy, as the model’s capacity for understanding extremely broad or complex instructions without guidance will differ from larger models.

- Improved User Experience & Offline Access: Expect faster interactions due to minimised network latency, and the potential for features that keep working offline once the model has been downloaded.

The biggest takeaway here is the opportunity to explore new avenues for GenAI capabilities in your Add-ons, albeit with the understanding that this is experimental territory and on-device models have different characteristics and capacities compared to larger, cloud-based models.

Proof of Concept: AI-Powered Text-to-Diagram Add-on

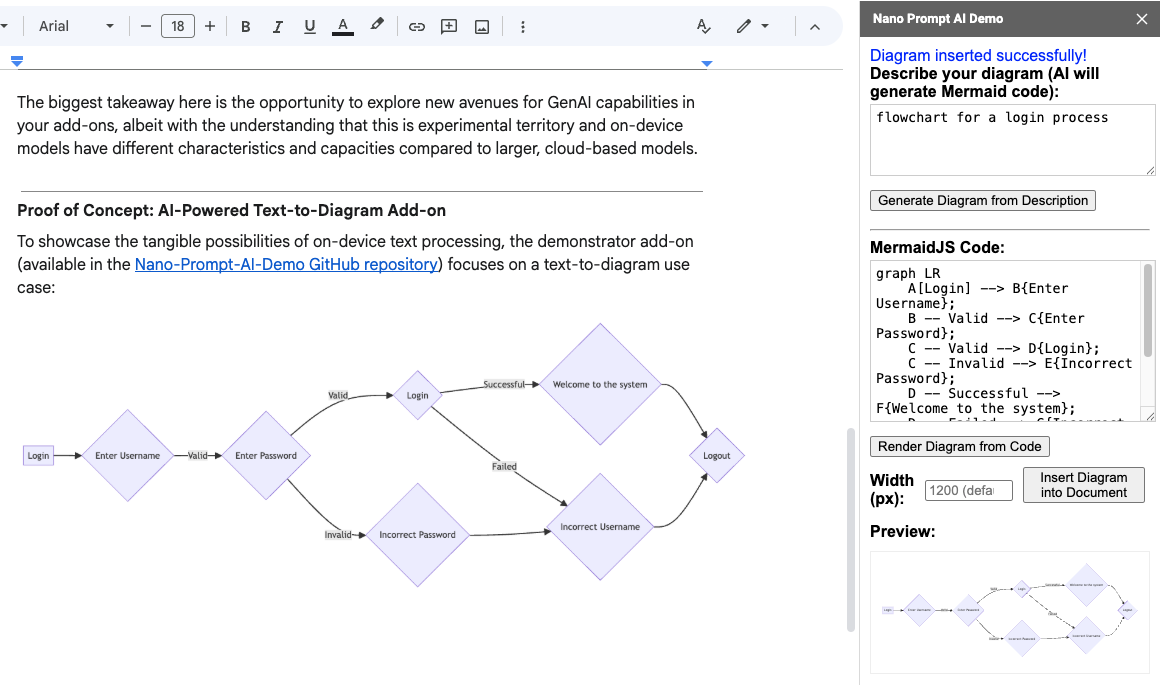

To showcase the tangible possibilities of on-device text processing, the demonstrator Add-on (available in the Nano-Prompt-AI-Demo GitHub repository) focuses on a text-to-diagram use case:

- Users can describe a diagram in natural language (e.g., “flowchart for a login process”).

- The Add-on then uses Gemini Nano via the Prompt API to convert this text description into MermaidJS diagram code.

- It also allows users to directly edit the generated MermaidJS code, see a live preview, and utilise an AI-powered “Fix Diagram” feature if the code has errors.

- Finally, the generated diagram can be inserted as a PNG image into their Google Workspace file.

Nano Prompt API Demo

This example illustrates how the Prompt API can be used for practical tasks within a Google Workspace environment.

Under the Bonnet: Utilising the Chrome Gemini Nano Prompt API for Text

The Add-on interacts with Gemini Nano via client-side JavaScript using the LanguageModel object in the Sidebar.html file. I should also highlight that all of the Sidebar.html code was written by the Gemini 2.5 Pro model in gemini.google.com with my guidance, which included providing the appropriate developer documentation and this explainer for the Prompt API.

The Add-on’s core logic for text-to-diagram generation includes:

- Session Creation and Prompt Design for Gemini Nano: A LanguageModel session is created using LanguageModel.create().

- Generating Diagrams from Text: The user’s natural language description is sent to the AI via session.prompt(textDescription).

- AI-Powered Code Fixing: If the generated or manually entered MermaidJS code has errors, the faulty code along with the error message is sent back to the model for attempted correction.
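The three steps above can be sketched roughly as follows. This is a minimal illustration rather than the code in the demo's Sidebar.html: the function names (`createDiagramSession`, `generateDiagram`, `fixDiagram`, `buildFixPrompt`) and the wording of the fix prompt are assumptions made for this example. Only `LanguageModel.create()`, the `initialPrompts` option, and `session.prompt()` come from the Prompt API itself.

```javascript
// Sketch only: names and prompt wording are hypothetical, not taken from the demo.

// Build the follow-up prompt for the "Fix Diagram" step: the faulty code and
// the renderer's error message are sent back to the model together.
function buildFixPrompt(faultyCode, errorMessage) {
  return [
    'The following MermaidJS code fails to render.',
    `Error message: ${errorMessage}`,
    'Faulty code:',
    faultyCode,
    'Return only the corrected MermaidJS code.',
  ].join('\n');
}

// Create a session with a system prompt. `languageModel` defaults to Chrome's
// global LanguageModel object, which only exists where the Prompt API is enabled.
async function createDiagramSession(systemPrompt, languageModel = globalThis.LanguageModel) {
  return languageModel.create({
    initialPrompts: [{ role: 'system', content: systemPrompt }],
  });
}

// Send the user's natural language description and return the model's reply.
async function generateDiagram(session, textDescription) {
  return session.prompt(textDescription);
}

// Ask the model to repair code that failed to render.
async function fixDiagram(session, faultyCode, errorMessage) {
  return session.prompt(buildFixPrompt(faultyCode, errorMessage));
}
```

Passing the model object in as a parameter is a design choice for this sketch: it lets the same functions run against a stub outside Chrome, which is handy while the API is still experimental.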

Given that Gemini Nano is, as its name suggests, a smaller LLM, careful prompt design is key to achieving optimal results. In this demonstrator Add-on, for instance, the system prompt supplied through initialPrompts plays a crucial role. It not only instructs the AI to act as a MermaidJS expert and to output only raw MermaidJS markdown, but it also includes two explicit examples of MermaidJS code within those instructions.

Providing such “few-shot” examples within the system prompt was found to significantly improve the reliability and accuracy of the generated diagram code from text descriptions. This technique helps guide the smaller model effectively.
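As a rough sketch of what such a few-shot system prompt might look like, the snippet below assembles the role instruction, the output constraint, and two worked examples into a single `initialPrompts` entry. The example diagrams, the instruction wording, and the helper names (`buildSystemPrompt`, `buildInitialPrompts`) are assumptions for illustration; the demo's actual prompt may differ.

```javascript
// Hypothetical few-shot examples; the demo's real examples may differ.
const EXAMPLES = [
  {
    description: 'flowchart for a login process',
    code: [
      'flowchart TD',
      '  A[Enter credentials] --> B{Valid?}',
      '  B -- Yes --> C[Show dashboard]',
      '  B -- No --> A',
    ].join('\n'),
  },
  {
    description: 'sequence diagram of a client requesting data from a server',
    code: [
      'sequenceDiagram',
      '  Client->>Server: Request data',
      '  Server-->>Client: Return data',
    ].join('\n'),
  },
];

// Combine the instructions and the worked examples into one system prompt.
function buildSystemPrompt(examples) {
  const shots = examples.map(
    (ex, i) => `Example ${i + 1}\nDescription: ${ex.description}\nMermaidJS:\n${ex.code}`
  );
  return [
    'You are a MermaidJS expert.',
    'Reply with raw MermaidJS code only: no explanations and no code fences.',
    ...shots,
  ].join('\n\n');
}

// Shape the prompt the way LanguageModel.create({ initialPrompts }) expects it.
function buildInitialPrompts(examples = EXAMPLES) {
  return [{ role: 'system', content: buildSystemPrompt(examples) }];
}
```

The resulting array could then be passed as `LanguageModel.create({ initialPrompts: buildInitialPrompts() })`. Keeping the examples in a plain array makes it easy to experiment with different shots while tuning for the smaller model.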

Navigating Experimental Waters: Important Considerations (and Reassurances)

It’s important to reiterate that the majority of these AI APIs are still experimental. Functionality can change, and specific Chrome versions and flags are often required. I recommend referring to the official Chrome AI Documentation and joining the Early Preview Program for the latest details and updates.

Before you go updating your popular production Google Workspace Add-ons, you should be aware of the current system prerequisites. As of this writing, these include:

- Operating System: Windows 10 or 11; macOS 13+ (Ventura and onwards); or Linux.

- Storage: At least 22 GB of free space on the volume that contains your Chrome profile is necessary for the model download.

- GPU: A dedicated GPU with strictly more than 4 GB of VRAM is often a requirement for performant on-device model execution.

Currently, APIs backed by Gemini Nano do not yet support Chrome for Android, iOS, or ChromeOS. For Workspace Add-on developers, the lack of ChromeOS support is a significant consideration.
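Because support varies so much by device and platform, an Add-on sidebar would typically check for the API before offering any AI features. The sketch below assumes the `LanguageModel.availability()` method and its four documented states (`unavailable`, `downloadable`, `downloading`, `available`); the helper names and the message wording are hypothetical.

```javascript
// Map the Prompt API's availability states to a message for the sidebar.
// The wording is illustrative only.
function describeAvailability(status) {
  switch (status) {
    case 'available':
      return 'Gemini Nano is ready.';
    case 'downloadable':
      return 'Gemini Nano can be downloaded on this device.';
    case 'downloading':
      return 'Gemini Nano is downloading.';
    default:
      return 'On-device AI is not available in this browser.';
  }
}

// Feature-detect the API, then ask Chrome whether the model can be used.
// `scope` defaults to the global object so the check can be run with a stub.
async function checkPromptApi(scope = globalThis) {
  if (!('LanguageModel' in scope)) return 'unavailable';
  return scope.LanguageModel.availability();
}
```

In a sidebar this might be used as `describeAvailability(await checkPromptApi())`, with the AI controls hidden or disabled whenever the result is not `available`.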

However, Google announced at I/O 2025 in the ‘Practical built-in AI with Gemini Nano in Chrome’ session that the text-only Prompt API, powered by Gemini Nano, is generally available for Chrome Extensions starting in Chrome 138. While general web page use of the Prompt API remains experimental, this move hopefully signals a clear trajectory from experiment to production-ready capabilities.

Bridging the Gap: The Hybrid SDK

To address device compatibility across the ecosystem, Google has announced a Hybrid SDK. This upcoming extension to the Firebase Web SDK aims to use built-in APIs locally when available and fall back to server-side Gemini otherwise, with a developer preview planned (see https://goo.gle/hybrid-sdk-developer-preview for more information). This initiative should provide a more consistent development experience and wider reach for AI-powered features.
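To make the local-first idea concrete, here is a hand-rolled sketch of the pattern. It is not the Hybrid SDK and does not use any Firebase API: `chooseBackend`, `promptWithFallback`, `localPrompt`, and `remotePrompt` are all hypothetical names, with the two prompt functions supplied by the caller (for example, one wrapping `session.prompt()` and one calling your own server-side Gemini integration).

```javascript
// NOT the Hybrid SDK: a hand-rolled illustration of the local-first pattern.

// Decide which backend to use from the Prompt API availability state.
function chooseBackend(availability) {
  return availability === 'available' ? 'local' : 'remote';
}

// `localPrompt` and `remotePrompt` are hypothetical async functions that
// each take the prompt text and resolve to the model's reply.
async function promptWithFallback(text, { availability, localPrompt, remotePrompt }) {
  if (chooseBackend(availability) === 'local') {
    try {
      return await localPrompt(text);
    } catch (err) {
      // If the on-device call fails, fall through to the server.
    }
  }
  return remotePrompt(text);
}
```

Note that any fallback to a server gives up the data-stays-in-the-browser property for those requests, so it is worth making that choice visible to users and in your privacy policy.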

A Glimpse into the Future: Empowering Workspace Innovation

On-device AI opens new opportunities for privacy-centric, responsive, and cost-effective Add-on features. While the demonstrator Add-on focuses on text generation, the Prompt API and the broader suite of on-device AI tools in Chrome offer much more for developers to explore.

Focusing on Unique Value for Workspace Add-ons

It’s important for developers to consider how these on-device AI capabilities—be it advanced text processing or new multimodal interactions which support audio and image inputs from Chrome 138 Canary—can be used to extend and enhance user experience in novel ways, rather than replicating core Gemini for Google Workspace features. The power lies in creating unique, value-added functionalities that complement native Workspace features.

Explore, Experiment, and Provide Feedback!

This journey into on-device AI is a collaborative one, and Google Workspace developers have an opportunity to help shape it.

- Explore the Demo: Dive into the Nano-Prompt-AI-Demo GitHub repository to see the text-to-diagram features in action.

- Try It Out: Follow setup instructions to experience on-device AI with the demo, and consider exploring multimodal capabilities for your own projects by referring to the latest Early Preview Program updates.

- Provide Feedback: Share your experiences either about the example add-on or through the Early Preview Program.

I hope you have as much fun working with these APIs as I have and look forward to hearing how you get on. Happy Scripting!

Member of Google Developers Experts Program for Google Workspace (Google Apps Script) and interested in supporting Google Workspace Devs.